What if the approach your team uses today to evaluate advanced automation tools became obsolete tomorrow? Core capabilities now shift so quickly, with major releases arriving roughly every six months, that yesterday’s “cutting-edge” solutions struggle with tasks that modern systems handle effortlessly.

Leading organizations now face a critical challenge: navigating an ecosystem where providers like OpenAI, Anthropic, and Google release smarter versions faster than most teams can test them. Scaling laws sharpen the divide: performance tends to improve predictably as parameters, training data, and compute grow, though with diminishing returns rather than exponential gains. That makes initial selection crucial for long-term success.

Premium access to frontier systems typically costs $20/month per user, but delivers tangible advantages over free alternatives. These advanced tools process complex data streams more accurately while offering specialized features that adapt to unique business needs. Our framework helps decision-makers cut through the noise by aligning technical specifications with operational requirements.

Key Takeaways

- Core automation capabilities evolve every 6 months, requiring continuous evaluation

- Premium systems from leading providers offer 40%+ accuracy improvements

- Effective selection requires balancing cost, scalability, and task specificity

- Larger systems tend to perform better, following empirical scaling laws, though gains diminish as size grows

- Implementation success hinges on matching features to operational workflows

Understanding the Fundamentals of AI Models

Modern enterprises face growing pressure to implement systems that adapt as quickly as business challenges evolve. At the core of these solutions lie specialized programs trained to interpret information and execute operations autonomously. Let’s break down how these tools transform raw numbers into actionable insights.

What Are AI Models and Their Core Concepts

These programs function like digital apprentices, learning from massive datasets to recognize patterns humans might miss. Developers feed them historical information and algorithmic rules, enabling data-driven decisions without constant human oversight. A fraud detection system analyzing transaction records exemplifies this – the more financial data it processes, the better it identifies suspicious activity.

Machine Learning vs. Deep Learning Demystified

While traditional software follows fixed instructions, machine learning enables programs to refine their logic through experience. Deep learning takes this further by stacking many layers of artificial neurons, a design loosely inspired by connections in the brain rather than a replica of them. These hierarchical structures allow systems to handle complex tasks like translating languages or predicting machinery failures with high precision.

One industry leader notes:

“The true power emerges when training data quality matches the sophistication of the algorithms.”

This synergy enables programs to not just replicate human thinking patterns but enhance them through scale and speed.

Navigating Different Types of AI Models

Modern solutions require precise alignment between organizational needs and technical capabilities. Three primary learning frameworks form the foundation of most advanced systems today. Each excels in specific scenarios, from structured data analysis to autonomous pattern discovery.

Supervised learning models operate like skilled apprentices. They learn from labeled datasets in which each input is paired with a known output. Financial institutions use these for fraud detection, training systems with historical transaction records to identify suspicious patterns.
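As a minimal sketch of the supervised idea, the following trains a nearest-centroid classifier on a handful of labeled transactions. The feature names, values, and labels are invented for illustration, and nearest-centroid is only a simple stand-in for the richer models a production fraud system would use.

```python
from statistics import mean


def train_centroids(examples):
    """Average the feature vectors of each label (nearest-centroid training)."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical labeled history: (amount in dollars, transactions in the last hour).
# Features are left unscaled here, so the amount dominates the distance.
labeled_history = [
    ((25.0, 1), "legitimate"),
    ((40.0, 2), "legitimate"),
    ((12.5, 1), "legitimate"),
    ((950.0, 9), "suspicious"),
    ((1200.0, 7), "suspicious"),
]

centroids = train_centroids(labeled_history)
print(predict(centroids, (30.0, 1)))
print(predict(centroids, (1100.0, 8)))
```

The labels do the teaching here: without the "legitimate" and "suspicious" tags there would be nothing to average per class.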

Unsupervised learning models function as independent explorers. Without pre-labeled data, they cluster information based on hidden relationships. Retailers leverage this approach for customer segmentation, revealing purchasing trends that manual analysis might miss.
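To make the clustering idea concrete, here is a small one-dimensional k-means sketch in plain Python. The monthly spend figures and the starting centers are assumptions chosen for illustration, and real segmentation would normally use several features and a tested library implementation.

```python
def kmeans_1d(values, centers, iterations=10):
    """Minimal 1-D k-means: alternate assignment and center-update steps."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: each value joins the cluster with the nearest center.
        clusters = [[] for _ in centers]
        for value in values:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Update step: move each center to the mean of its cluster.
        # An empty cluster keeps its previous center.
        centers = [
            sum(cluster) / len(cluster) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
    return centers, clusters


# Hypothetical monthly spend per customer, with no labels attached.
monthly_spend = [12, 15, 18, 20, 210, 230, 250, 90, 95, 105]
centers, segments = kmeans_1d(monthly_spend, centers=[0, 100, 300])
print(centers)
print(segments)
```

No one told the algorithm that low, medium, and high spenders exist. The three groups emerge from distances alone, and interpreting them is left to the analyst.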

| Framework | Training Method | Best For |
|---|---|---|
| Supervised | Labeled datasets | Predictive tasks |
| Unsupervised | Unlabeled data | Pattern discovery |
| Reinforcement | Reward-based trials | Dynamic optimization |

Reinforcement learning systems evolve through continuous experimentation. Autonomous vehicles use this approach, refining navigation decisions based on real-time feedback. These systems improve efficiency through repeated environmental interactions.
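The reward-driven loop can be sketched with an epsilon-greedy bandit, one of the simplest reinforcement learning setups. The three actions, their average rewards, and the noise range below are made-up values for a simulated environment. Real systems such as vehicle navigation involve state, safety constraints, and far more elaborate algorithms.

```python
import random


def epsilon_greedy(true_means, steps=500, epsilon=0.1, seed=0):
    """Learn which action pays best by trial, error, and occasional exploration."""
    rng = random.Random(seed)
    counts = [0] * len(true_means)
    estimates = [0.0] * len(true_means)

    def pull(arm):
        # Simulated environment: bounded noise around the arm's true mean reward.
        reward = true_means[arm] + rng.uniform(-0.1, 0.1)
        counts[arm] += 1
        # Incremental average: update the estimate without storing past rewards.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    for arm in range(len(true_means)):
        pull(arm)  # try every action once before acting greedily
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(true_means))  # explore a random action
        else:
            arm = max(range(len(true_means)), key=lambda a: estimates[a])  # exploit
        pull(arm)
    return estimates, counts


estimates, counts = epsilon_greedy([0.2, 0.5, 0.8])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_action, counts)
```

The `epsilon` parameter controls the trade-off: a higher value explores more and learns about every action, while a lower value commits sooner to whatever currently looks best.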

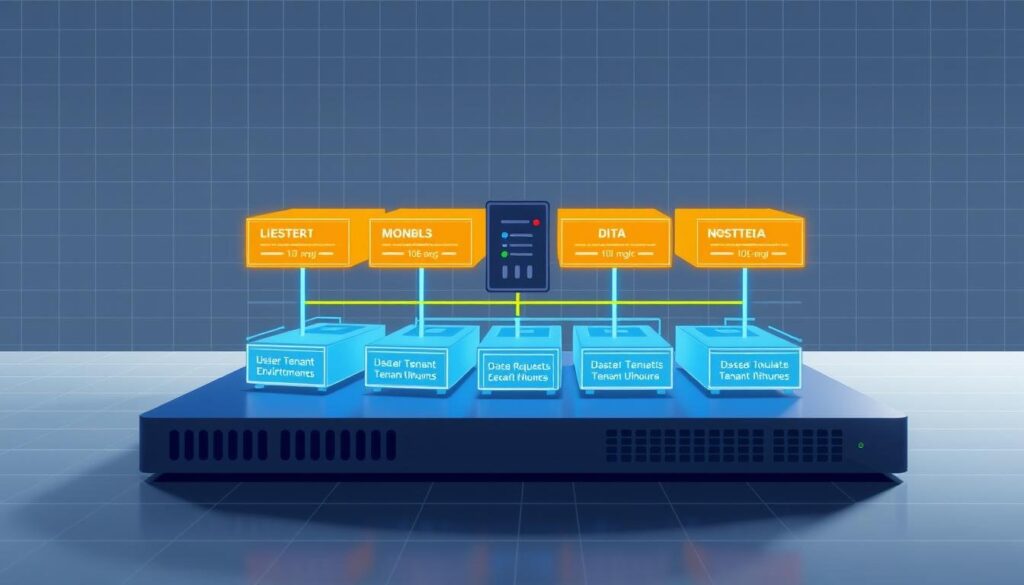

Hybrid approaches combine multiple frameworks for enhanced performance. Semi-supervised techniques merge labeled and unlabeled data, and the transformer architectures behind modern language systems are typically pretrained on unlabeled sequences before being refined with labeled examples. The right combination depends on data availability and operational objectives.

How to Choose an AI Model for Your Application

Strategic alignment separates successful implementations from costly missteps in advanced system deployment. Our methodology focuses on bridging technical specifications with tangible business outcomes through a phased evaluation process.

Defining Operational Requirements

Start by categorizing your primary need: prediction, classification, or anomaly detection. Fraud analysis systems, for example, require high precision to minimize false alarms. Evaluate your data’s structure first – tabular datasets demand different architectures than image recognition tasks.
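Precision has a concrete definition worth pinning down before requirements are written. The sketch below computes precision, recall, and F1 from confusion counts. The counts themselves are hypothetical.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision: share of alerts that were real. Recall: share of real cases caught."""
    flagged = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical month of fraud alerts: 80 real frauds flagged,
# 20 false alarms, and 40 frauds missed.
precision, recall = precision_recall(80, 20, 40)
print(precision, recall, f1_score(precision, recall))
```

In this example 80% of alerts are genuine, but a third of real fraud slips through. A requirement that names only one of the two numbers leaves the other free to degrade.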

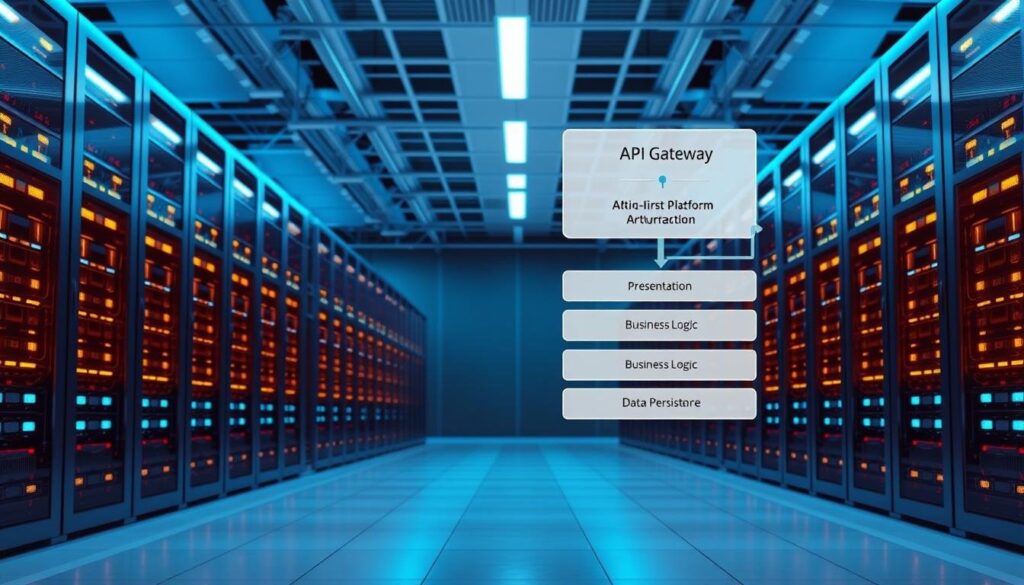

Deployment environment dictates critical constraints. Edge computing solutions prioritize lightweight designs, while cloud-based tools leverage expansive resources. One tech leader emphasizes:

“Teams that clarify compliance needs upfront reduce rework by 62% during implementation.”

Aligning Specifications with Outcomes

Performance metrics should mirror priority outcomes. Customer service chatbots need high recall to address all queries, while inventory systems prioritize accuracy. We map these requirements to system architectures through weighted scoring matrices.
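A weighted scoring matrix can be as simple as the sketch below. The criteria, weights, candidate names, and 1-10 scores are placeholders rather than measurements of any real system. In practice each team would supply its own.

```python
def weighted_score(scores, weights):
    """Weighted average of criterion scores, normalized by the total weight."""
    total_weight = sum(weights.values())
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


# Hypothetical priorities for a customer service chatbot.
weights = {"recall": 0.5, "latency": 0.3, "cost": 0.2}

# Hypothetical candidates scored 1-10 on each criterion (higher is better).
candidates = {
    "model_a": {"recall": 9, "latency": 5, "cost": 4},
    "model_b": {"recall": 7, "latency": 8, "cost": 8},
}

ranking = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], weights),
    reverse=True,
)
for name in ranking:
    print(name, round(weighted_score(candidates[name], weights), 2))
```

Here `model_b` ranks first despite its lower recall score, because latency and cost together outweigh the difference. Shifting the weights changes the outcome, which is why they should be agreed before candidates are scored.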

Structured evaluation prevents overengineering. A healthcare diagnostics tool might require explainable decisions, whereas retail recommendation engines prioritize speed. Our framework identifies mismatches early, ensuring capabilities directly address operational problems rather than chasing technical novelty.

Real-world success hinges on iterative validation. Pilot testing with sample data reveals practical limitations before full deployment. This fail-fast approach optimizes resource allocation while maintaining strategic focus.

Evaluating Key Features and Capabilities

Real-time processing and multimodal inputs redefine operational efficiency in modern systems. Cutting-edge platforms now handle simultaneous voice conversations while analyzing visual information – capabilities that transform customer service and field operations.

Live Mode, Reasoning, and Real-Time Interaction

Live Mode enables fluid voice conversations without typing. Current implementations process speech patterns and environmental sounds while maintaining dialogue context. This creates natural interactions where systems respond to verbal cues and background noises simultaneously.

Reasoning architectures introduce deliberate analysis phases before generating responses. One technical director observes:

“Systems that spend 12-15 seconds analyzing complex queries produce 38% more accurate solutions than instant responders.”

This “thinking time” proves critical for technical support and financial analysis tasks.

Multimodal Functionality and Advanced Features

Top-tier platforms combine vision, sound, and text processing seamlessly. They interpret diagrams in PDFs while discussing their contents verbally – a capability essential for engineering teams reviewing technical documentation.

Memory capacity varies dramatically between systems. Some accept context windows of up to roughly 2 million tokens, enough to keep intricate details from very long conversations or documents in view. Others focus on immediate tasks with tighter memory constraints.
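Context limits are usually expressed in tokens rather than words, so a rough budget check helps when comparing systems. The sketch below assumes about four characters per token, a common rule of thumb for English text and not an exact figure. The limits and reply reserve are illustrative, and a provider's own tokenizer should be used for real planning.

```python
import math


def estimated_tokens(text, chars_per_token=4.0):
    """Rough token estimate; chars_per_token is a heuristic, not a tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def fits_context(texts, context_limit, reserved_for_reply=1000):
    """Check whether the texts plus a reply reserve fit within a token limit."""
    used = sum(estimated_tokens(text) for text in texts)
    return used + reserved_for_reply <= context_limit, used


# Hypothetical conversation history made of two long messages.
history = ["word " * 2000, "word " * 3000]

print(fits_context(history, context_limit=8000))
print(fits_context(history, context_limit=7000))
```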

Specialized capabilities include real-time code validation and integrated web searches. While powerful, these features demand careful evaluation. Teams should prioritize tools matching their primary workflows rather than chasing unnecessary capabilities.

Analyzing Data, Training, and Performance Metrics

Organizations now recognize that high-quality information fuels smarter decisions across every operational layer. Properly managed datasets act as the foundation for systems that evolve with real-world demands, transforming raw inputs into reliable outcomes.

Data Quality, Volume, and Labeling Strategies

We help teams establish clear benchmarks for dataset evaluation. Larger volumes enable sophisticated pattern recognition, but only when paired with rigorous cleaning processes. One tech director notes:

“Systems trained on curated datasets with 1M+ records achieve 73% faster accuracy milestones than those using smaller samples.”

Our framework addresses three critical components:

| Purpose | Dataset Type | Size Recommendation | Key Metrics |
|---|---|---|---|
| Learning Patterns | Training | 60-70% Total Data | Accuracy, Loss |
| Performance Check | Validation | 15-20% Total Data | Precision, Recall |
| Final Evaluation | Test | 15-20% Total Data | F1 Score, AUC-ROC |
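The three-way split in the table can be sketched in a few lines. The 70/15/15 proportions are one choice within the recommended ranges, and the fixed seed and integer records are assumptions made so the example is reproducible.

```python
import random


def split_dataset(records, train=0.70, validation=0.15, seed=42):
    """Shuffle once, then cut into training, validation, and test portions."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    train_end = round(len(shuffled) * train)
    validation_end = train_end + round(len(shuffled) * validation)
    return (
        shuffled[:train_end],
        shuffled[train_end:validation_end],
        shuffled[validation_end:],  # whatever remains becomes the test set
    )


# Hypothetical dataset of 1,000 record IDs.
train_set, validation_set, test_set = split_dataset(range(1000))
print(len(train_set), len(validation_set), len(test_set))
```

Shuffling before cutting matters when records arrive in a meaningful order, such as by date. For time-dependent data, a chronological split is often the safer choice.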

Labeling strategies differ by learning approach. Supervised systems require detailed annotations to map inputs to outputs. Unsupervised methods need no labels, but they still depend on clean, consistently formatted data to reveal hidden relationships.

We implement continuous validation protocols to detect bias. Regular updates keep datasets relevant as market conditions shift. This prevents performance degradation and maintains alignment with operational goals.
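One simple form of bias check compares accuracy across groups in the validation data. The group names, predictions, and outcomes below are invented, and a per-group accuracy gap is only one of several possible fairness signals.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical validation results: (group, predicted label, actual label).
validation_results = [
    ("region_a", 1, 1), ("region_a", 0, 0), ("region_a", 1, 0), ("region_a", 0, 0),
    ("region_b", 1, 0), ("region_b", 0, 0), ("region_b", 0, 1), ("region_b", 1, 1),
]

per_group = accuracy_by_group(validation_results)
print(per_group, accuracy_gap(per_group))
```

Running a check like this on each dataset refresh turns "detect bias" into a number that can be tracked. What gap counts as acceptable remains a judgment call for the team.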

Comparing Supervised, Unsupervised, and Reinforcement Learning Models

Organizational success with advanced systems increasingly depends on selecting the optimal learning framework. Three distinct methodologies dominate enterprise applications, each offering unique advantages for specific operational challenges.

Strengths and Weaknesses of Each Approach

Supervised systems thrive when working with labeled historical information. They deliver precise predictions for inventory management or fraud detection but require extensive training datasets. A retail analytics director notes:

“Teams using supervised methods reduce forecasting errors by 58% compared to manual processes – but only with clean, well-structured data.”

Unsupervised techniques uncover hidden patterns in raw information. These systems excel at customer segmentation without predefined categories. However, their findings often need human interpretation to translate into actionable strategies.

| Approach | Data Requirements | Best Use Cases | Limitations |
|---|---|---|---|
| Supervised | Labeled historical records | Sales forecasting, spam filtering | High annotation costs |
| Unsupervised | Unlabeled data | Market basket analysis, anomaly detection | Subjective interpretation |
| Reinforcement | Dynamic environment feedback | Robotics control, game AI | Extended training periods |

Reinforcement systems learn through continuous environmental interaction. While ideal for robotics, they demand significant computational resources during the trial-and-error phase. Hybrid approaches combine multiple methods – semi-supervised techniques blend labeled and raw data to reduce annotation costs while maintaining accuracy.

Incorporating AI in Business Operations and Workflow

Operational transformation now demands strategic integration of intelligent solutions. Forward-thinking organizations like PwC demonstrate this shift through billion-dollar investments in workforce training and automation tools. Their approach shows how aligning technical capabilities with employee skills drives measurable productivity gains.

Enhancing Productivity and Automating Tasks

We help businesses identify repetitive processes that drain resources. Data organization, trend analysis, and workflow optimization become prime candidates for automation. Intelligent software solutions now handle credit fraud detection and equipment failure prediction with 92% accuracy in pilot programs.

Our methodology mirrors industry leaders’ success patterns. Chatbot assistants reduce customer service response times by 40% while maintaining quality standards. These systems learn from user interactions, adapting to unique business vocabularies and operational rhythms.

Implementation requires careful planning. We prioritize tools that integrate with existing infrastructure rather than demanding complete overhauls. This approach minimizes disruption while maximizing ROI – critical for companies scaling their digital transformation efforts.

The right combination of user training and technical deployment creates lasting impact. Teams equipped with smart tools report 35% faster task completion rates, freeing human talent for strategic development work. This balance between automation and human oversight future-proofs operations against evolving market demands.